Where creativity meets innovation


AWS App Studio

Next-generation AI-powered low-code tool that empowers a new set of builders to create enterprise-grade applications in minutes.

view on aws.amazon.com

UX DES/ARCHITECT

0-1 PROJECT

PRODUCT STRATEGY

Starts with enterprise customers


The idea originated from customer requests. We observed that many AWS enterprise customers frequently use mature low-code platforms like Mendix, OutSystems, Microsoft Power Apps, and ServiceNow to build solutions for their organizations. However, these platforms often lack seamless integration with AWS data and governance features. To address these gaps, the team decided to start a low-code project offering a robust, integrated solution tailored to high-value enterprise use cases.

Integrates with AWS services seamlessly


To meet the use case requirements of enterprise customers, App Studio aimed to deliver rich UI components, native connectors to AWS data, advanced automation workflows, and comprehensive application lifecycle management. The goal was to build a platform that seamlessly integrates with AWS services while providing a powerful and flexible environment for application development, with a target launch within 18 months.

My role


Architect

I led the team that architected the builder experience and delivered an illustrative vision encompassing all the potential features.

Automation & code editor

I also delivered features for the automation flow builder, parameters, and the low-code editor, and conducted user research.

No-code veteran

As the most experienced no-code member of the team, I leveraged my past knowledge to help other teams accelerate their progress.

A little word before reading

Looking back over the past two years, the journey has been both challenging and exciting. Learning from multiple testing sessions, competitors, and real customers, our strategy evolved significantly. Meanwhile, GenAI unexpectedly emerged midway through our journey, bringing a wealth of new perspectives to App Studio and shaping our strategy going forward.

This case study is about how I worked through the IA for App Studio. I have divided it into 4 parts, each covering a different phase of its evolution.

Phase One: Personas and use cases


# aws enterprise personas

To fully understand who we were building for, the whole team sat together for weeks to define our first set of personas and their use cases. Following the goals and the project proposal document written at an earlier stage, we chose to laser-focus on a sub-group of the AWS user base: business developers.

# Admin | Builder | App user

To further identify the user journey and the spectrum of roles throughout the build-to-publish process, we dug deep into the personas and mapped out three critical roles for the product: Admin, Builder, and App user.

I was tasked with leading the information architecture (IA) effort specifically for the builder role. Builders would spend the most time in the building process, and they are the people we wanted to win over in the market.


# No-code <> Low-code

Now, what does low-code mean compared to no-code? We acknowledged that any predefined component, automation step, or data format has its limits, and as a productivity tool, we wanted to enable as many use cases as possible. Therefore, the ability to write basic code, such as JavaScript, was necessary. On top of that, maintaining automations and data entities as working assets became a requirement as well, to keep functions and code easy to fix and debug.

# Canonical use cases

On the other hand, there was another question: what UI components, automation steps, and data formats should we deliver? To help answer this, I partnered with our researcher to run interview sessions with several Amazon internal teams that had strong use cases and had been using other low-code tools, such as Amazon Honeycode.

As a Honeycode veteran, seeing how people reacted to the new ideas gave me plenty of faith in this new product.

At the same time, I worked with our visual designer to create quick mocks to showcase during the interviews. Based on these conversations and the mocks for our targeted use cases, we were able to nail down the first batch of components.


Phase Two: Build fundamentals with engineering

"…how might we help builders develop an app easily?"

"…how might we guide builders to successfully create what they want?"

# IA = user journey

This exciting phase began with researching existing tools to understand their pros and cons. The aim was to draw insights from their modes, panels, and canvas structures to create a solid user journey for App Studio builders.


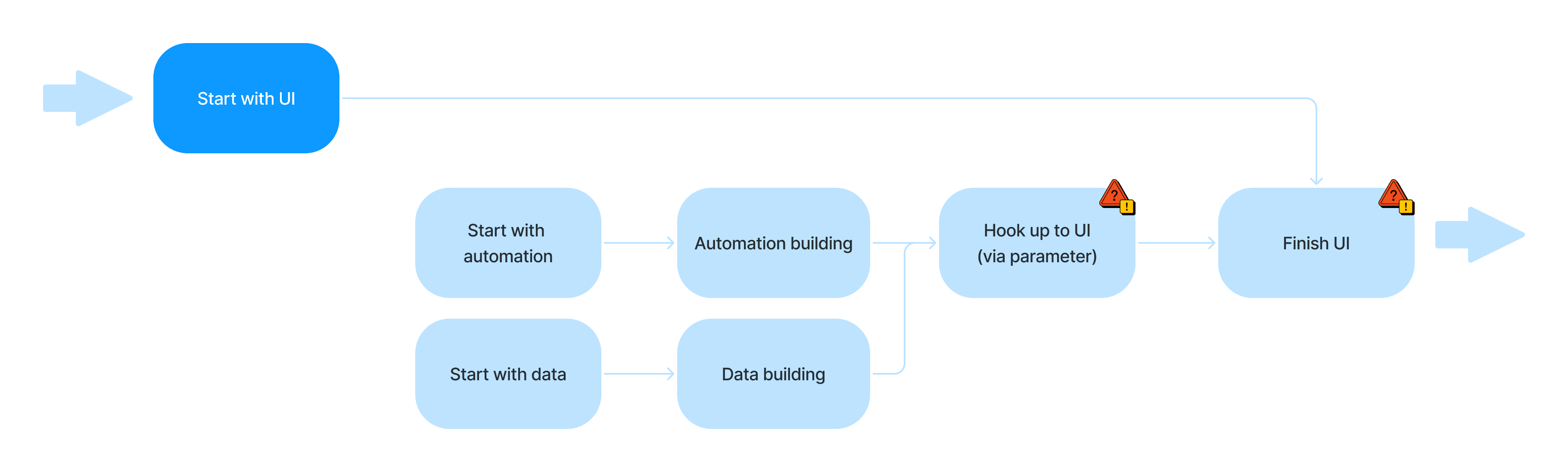

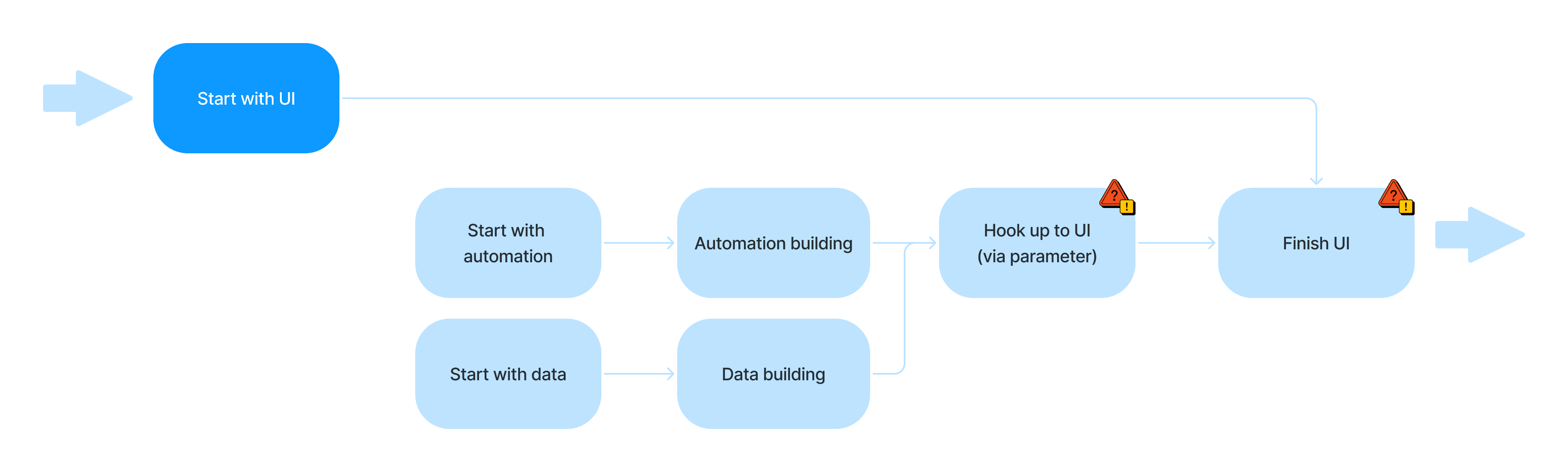

# UI-dominated <> parallel start points

Reflecting on the journey map, two distinct perspectives emerged, revealing two primary approaches regarding how the builder's journey should be structured.


UI-dominated

Drawing from previous no-code experience, part of the team believed that the builder's goal is to create a usable interface. The IA should be centered around the UI, beginning and ending with it.

Coming from Honeycode, I was biased toward this approach at this stage.

Parallel modes

Conversely, another perspective suggested that we should not be opinionated about forcing users to start with the UI.

For example, if someone wants to build a UI-less app, such as sending a Slack message every morning, they could simply build an automation flow and use it.

To validate whether users had a preferred mental model, I took a quick stab at mocking up the two versions and brought both into conversations with customers.
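To make the difference between the two perspectives concrete, here is a minimal TypeScript sketch of how each might model an app. All type and field names (`Page`, `Automation`, `Entity`, and so on) are hypothetical illustrations, not App Studio's actual data model. In the UI-dominated model, automations hang off pages; in the parallel modes model, pages, automations, and data entities are sibling top-level assets, so a UI-less app is valid.

```typescript
// Hypothetical asset types for illustration only; not App Studio's real schema.
interface Automation {
  name: string;
  trigger: "onEvent" | "schedule";
}

interface Page {
  name: string;
}

interface Entity {
  name: string;
}

// UI-dominated: every automation lives under a page, so the UI is the anchor.
interface UiDominatedApp {
  pages: Array<Page & { automations: Automation[] }>;
  entities: Entity[];
}

// Parallel modes: pages, automations, and entities are independent top-level assets.
interface ParallelModesApp {
  pages: Page[];
  automations: Automation[];
  entities: Entity[];
}

// In the UI-dominated model, an app without a page has nowhere to put automations.
function isValidUiDominated(app: UiDominatedApp): boolean {
  return app.pages.length > 0;
}

// In the parallel modes model, any asset can be the starting point.
function isValidParallel(app: ParallelModesApp): boolean {
  return app.pages.length + app.automations.length + app.entities.length > 0;
}

// The UI-less example from the text: a scheduled automation that posts a Slack message.
const morningSlackApp: ParallelModesApp = {
  pages: [],
  automations: [{ name: "sendMorningSlackMessage", trigger: "schedule" }],
  entities: [],
};
```

The sketch only captures the structural question the two mock-ups posed: whether the UI must be the root of everything a builder creates.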

# the layout

Meanwhile, from studying other platforms, we decided to adopt a common layout that includes a top-level header, a left panel for navigation, a working canvas, a right panel for configuration, and a utility toolbar.

I explored how the two approaches would utilize these panels, then presented both to 10 participants, gathered detailed feedback, and gained valuable insights.

UI-dominated

The UI-dominated approach keeps the UI canvas anchored, allowing users to switch back easily.

Parallel modes

The parallel modes approach benefits from having individual canvases, but switching between modes can be frictional.

# compromise, decision making and iteration

The PM and I leaned toward the UI-dominated approach, and testing showed more optimistic results for it as well. We then opened the conversation to the larger group and presented the concepts and research insights to our engineering partners.

However, we faced our first challenge.🤔 Since we were only a few weeks into this new project and faced many unknowns, a less risky way to architect the product was to follow a typical software engineering team structure, which aligned with the parallel modes approach. This would provide a more scalable and efficient development process.

An interesting theory I've learned

Conway's law:

Organizations which design systems (in the broad sense used here) are constrained to produce designs which are copies of the communication structures of these organizations.

We went back and evaluated the trade-offs and potential impacts, and the team reached a compromise that balanced our design vision with organizational goals. To mitigate the downsides of parallel modes, such as frequent mode switching, I created new interactions, including a contextual button to switch back to the previous step. In addition, we decided to incorporate automation and query parameters.
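One way to think about the contextual "switch back to the previous step" button is as a navigation history stack. The following is a minimal TypeScript sketch under that assumption; the `Mode` values, `BuilderLocation`, and `NavigationHistory` are hypothetical names, not App Studio's implementation.

```typescript
// Hypothetical names for the three parallel modes.
type Mode = "pages" | "automations" | "data";

// Where the builder is: which mode, and which asset is open in it.
interface BuilderLocation {
  mode: Mode;
  assetId: string;
}

class NavigationHistory {
  private stack: BuilderLocation[] = [];

  // Jump to another mode, remembering where the builder came from.
  goTo(current: BuilderLocation, target: BuilderLocation): BuilderLocation {
    this.stack.push(current);
    return target;
  }

  // The contextual "back" button is only shown when there is a previous step.
  canGoBack(): boolean {
    return this.stack.length > 0;
  }

  // Pop the most recent location; undefined when there is nothing to go back to.
  back(): BuilderLocation | undefined {
    return this.stack.pop();
  }
}
```

For example, a builder editing a page who jumps into the automation it triggers can return to that page in one click, because `back()` yields the page location that `goTo()` recorded.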

# design system kits

Once the team reached an agreement, I went full throttle into production.

Partnering with design system peers, I published the UI kits in the Figma library. This provided each vertical triad team with a clear and consistent framework for building all upcoming features.

[Interactive prototype: UI kit layout specs for the header, left navigation, left panel, canvas, right panel, and error panel]


Phase Three: Addressing the outstanding issues


# beta-launch and testing

We launched App Studio in beta when the project was seven months old and received a ton of interest.✌️ Then, we conducted our first set of public testing sessions.

# The outstanding problem: mode switching

The testers went through the journey as planned, and the biggest pain points we discovered were no surprise: switching modes and using automation parameters.

We found that users understood the concept of the three modes and acknowledged their benefits. However, during hands-on testing, mode switching became more frustrating than expected. More than 90% of users reported getting lost when switching modes and needing to backtrack to where they were working. The reason was surprisingly simple but was overlooked in the design process.

Each time the mode switched, the left panel refreshed, making it difficult for users to quickly locate the asset they needed to focus on.

# ...and parameters failed too

Additionally, the parameter feature, which was designed to reduce context switching, did not work as intended and instead worsened the situation. Sending values across modes using parameters tripled the number of mode switches needed to complete the task, and tripled the frustration along with it.

To successfully pass data across modes using parameters, users had to switch between modes and configuration panels at least three times.

9 out of 10 testers got lost during these switches.

In case you're curious about what an automation parameter is

(this is how I explained it to my team)

It's similar to what we learned in math class when calculating the distance between home and school:

To calculate the distance, we use the formula:

Velocity × Time = Distance

Here, velocity and time are the parameters, and distance is the output.

Now, if V = 30 mi/h and T = 0.5 h

D = 30 × 0.5 = 15 mi.

Once you have the formula, you can apply different values to calculate the distance in various situations.
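The formula behaves like a function: it is written once, and its parameters are filled in each time it is used. A minimal TypeScript sketch of the math-class example (the function and variable names are illustrative, not taken from App Studio):

```typescript
// The formula: velocity and time are the parameters, distance is the output.
function distance(velocityMph: number, timeHours: number): number {
  return velocityMph * timeHours;
}

// Write the formula once, then reuse it with different values.
const homeToSchool = distance(30, 0.5); // 15 (miles)
const longerTrip = distance(60, 2); // 120 (miles)
console.log(homeToSchool, longerTrip);
```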

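In the context of App Studio, automation parameters work similarly. They allow you to create a central formula (automation/query) that can be applied to any UI you build.

However, despite the clear benefit, this approach diverges from the user journey, in which users aim to complete their tasks with the UI as their end goal.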

# reduce context switching

"…how might we help builders develop an app easily?"

"…how might we guide builders to successfully create what they want?"

"…how might we improve the usability of switching across modes?"

The problem quickly narrowed down to helping users switch modes smoothly. Without disrupting the fundamental parallel modes structure, I came up with two solutions:

Merge all 3 modes into one page

Instead of listing assets separately under different pages, merging all the assets from the modes into one list could help users navigate quickly and remember where each asset is located, allowing them to backtrack easily.
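A minimal sketch of the merge, assuming each asset is tagged with the mode it belongs to (the `Asset` shape and mode names are hypothetical). The root cause found in testing was that the left panel's contents were replaced on every mode switch; a merged list grouped in a fixed order stays the same regardless of the active mode.

```typescript
// Hypothetical asset model; App Studio's real schema may differ.
type Mode = "pages" | "automations" | "data";

interface Asset {
  id: string;
  name: string;
  mode: Mode;
}

interface AssetGroup {
  mode: Mode;
  assets: Asset[];
}

// Before: the left panel showed only the active mode's assets,
// so its contents were replaced on every mode switch.
function panelForMode(assets: Asset[], active: Mode): Asset[] {
  return assets.filter((a) => a.mode === active);
}

// After: one merged list, grouped by mode in a fixed order,
// so the panel looks the same no matter which mode is active.
function mergedPanel(assets: Asset[]): AssetGroup[] {
  const order: Mode[] = ["pages", "automations", "data"];
  return order.map((mode) => ({
    mode,
    assets: assets
      .filter((a) => a.mode === mode)
      .sort((x, y) => x.name.localeCompare(y.name)),
  }));
}
```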

Make parameters optional

Instead of requiring parameters to pass data across modes, making them optional and allowing users to pass values via expressions could be extremely helpful.
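A minimal sketch of "parameters optional, expressions allowed", assuming a step input can be a literal value, an inline expression evaluated against the current UI, or a reference to a declared parameter. The `StepInput` union and `resolveInput` function are hypothetical; App Studio's actual expression syntax is not described here, so expressions are modeled as plain functions.

```typescript
// Hypothetical UI context: values currently available on the page (e.g. form fields).
type UiContext = Record<string, unknown>;

// A step input is a literal, an inline expression, or (optionally) a declared parameter.
type StepInput =
  | { kind: "literal"; value: unknown }
  | { kind: "expression"; evaluate: (ui: UiContext) => unknown }
  | { kind: "parameter"; name: string };

function resolveInput(
  input: StepInput,
  ui: UiContext,
  params: Record<string, unknown> = {},
): unknown {
  switch (input.kind) {
    case "literal":
      return input.value;
    case "expression":
      // No parameter needed: the value is read straight from the UI.
      return input.evaluate(ui);
    case "parameter":
      // Declared parameters remain available for reusable automations.
      if (!(input.name in params)) {
        throw new Error(`Missing parameter: ${input.name}`);
      }
      return params[input.name];
  }
}
```

With the expression case available, the common path no longer requires creating a parameter in another mode before data can flow.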

And yes, App Studio switched to a dark theme around this time

# On top of that - it opens more opportunities

Beyond solving the mode switching problem, I discovered that the new IA proposal made additional interactions possible that could further enhance productivity.

Drag & drop across modes

Assets can be dragged and dropped across modes from the left panel.

For example, an entity can be connected to a table component to display data by dragging and dropping the entity onto the component.
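Conceptually, dropping an entity onto a table is a shortcut for setting the table's data source. A minimal sketch under that assumption; `EntityAsset`, `TableComponent`, and `onDropEntity` are hypothetical names, and deriving one column per entity field is an illustrative choice rather than documented App Studio behavior.

```typescript
// Hypothetical shapes for a data entity and a table component.
interface EntityAsset {
  name: string;
  fields: string[];
}

interface TableComponent {
  id: string;
  dataSource?: string;
  columns: string[];
}

// Dropping an entity onto a table binds the entity as the table's data source
// and shows one column per entity field. Returns a new component; the input is unchanged.
function onDropEntity(entity: EntityAsset, table: TableComponent): TableComponent {
  return { ...table, dataSource: entity.name, columns: [...entity.fields] };
}
```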

Split working canvas

If a user needs to switch to another mode while editing, the canvas will split into two. These two canvases can coexist on the same page, ensuring that no context is lost during the transition.

Overview & Global search

With all assets listed in one place, providing a flowchart to visualize their relationships can be very helpful.

Additionally, a global search can assist users in finding assets faster.
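With every asset in one list, global search can be a single filter over that list, and the overview flowchart can be derived from references between assets. A minimal sketch, assuming each asset records the ids of the assets it uses (the `uses` field and all other names are hypothetical).

```typescript
type Mode = "pages" | "automations" | "data";

interface Asset {
  id: string;
  name: string;
  mode: Mode;
  uses: string[]; // ids of the assets this one references (hypothetical field)
}

// Global search: a single case-insensitive name match across every mode.
function globalSearch(assets: Asset[], query: string): Asset[] {
  const q = query.trim().toLowerCase();
  return assets.filter((a) => a.name.toLowerCase().indexOf(q) !== -1);
}

// Overview: "from -> to" edges for a flowchart of asset relationships,
// skipping references to assets that no longer exist.
function relationshipEdges(assets: Asset[]): Array<[string, string]> {
  const edges: Array<[string, string]> = [];
  for (const a of assets) {
    for (const target of a.uses) {
      if (assets.some((b) => b.id === target)) {
        edges.push([a.id, target]);
      }
    }
  }
  return edges;
}
```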

# Another compromise ..(。•ˇ‸ˇ•。)..

I soon presented the ideas, and they were very well received by the team and leadership, earning a high priority.

However, we faced a challenge: hundreds of new features needed development, forcing us to compromise once again. The PM and I had to narrow the scope. Meanwhile, I used this as an opportunity to step back, rethink the user journey, and analyze our competitors.

Although initially frustrating, this shift in perspective proved to be a fortunate turn of events.

As I dug deeper, this analysis radically changed my perspective later on (Phase 4).

# parameter enhancement

"…how might we improve the usability of switching across modes?"

"…how might we reduce the complexity involved in creating and using parameters?"

Our focus soon shifted to parameters. The goal was to streamline steps and make parameters as accessible as possible within a single mode. I tackled this challenge from two angles: increasing parameter accessibility and revamping the create-to-use steps.

First, I enabled parameter creation directly on the canvas and within steps, reducing the need to switch panels within automation.

Second, following the notion of making parameters optional, I redesigned the flow to allow users to send values first and decide later whether they need to create parameters for reuse. This reduced mode switching and lowered the learning curve, making parameters necessary only for advanced use cases.
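The "send values first, create parameters later" flow resembles an extract-parameter refactoring. A minimal sketch, assuming an automation draft holds inline input values and that a promoted value becomes the new parameter's default; `AutomationDraft`, `InputValue`, and `promoteToParameter` are hypothetical names.

```typescript
// Hypothetical draft automation: inputs are sent inline first, parameters come later.
type InputValue = { inline: unknown } | { param: string };

interface AutomationDraft {
  name: string;
  parameters: Record<string, unknown>; // parameter name -> default value
  inputs: Record<string, InputValue>;
}

// Turn one inline input into a reusable parameter, keeping the inline value as its default.
function promoteToParameter(
  draft: AutomationDraft,
  inputKey: string,
  paramName: string,
): AutomationDraft {
  const current = draft.inputs[inputKey];
  if (current === undefined || !("inline" in current)) {
    throw new Error(`Input "${inputKey}" is not an inline value`);
  }
  return {
    ...draft,
    parameters: { ...draft.parameters, [paramName]: current.inline },
    inputs: { ...draft.inputs, [inputKey]: { param: paramName } },
  };
}
```

Because promotion is a one-step change to an already working automation, builders only meet parameters once they actually need reuse.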

These improvements were implemented shortly after the beta launch. We soon received positive feedback from customers and testers, who found it significantly easier to complete app building tasks.

Phase Three: Addressing the outstanding issues

TL;DR

# beta-launch and testing

We launched the seven-month-old App Studio in Beta and received a ton of interest.✌️Then, we conducted our first set of public testing sessions.

# The outstanding problem - modes switching

The testers went through the journey as planned, and the biggest pain points we discovered were no suprise - switching modes and using automation parameters.

We found that users understood the concept of the three modes and acknowledged their benefits. However, during hands-on testing, mode switching became more frustrating than expected. More than 90% of users reported getting lost when switching modes and needing to backtrack to where they were working. The reason was surprisingly simple but was overlooked in the design process.

Each time the mode switched, the left panel refreshed, making it difficult for users to quickly locate the asset they needed to focus on.

# ...and Parameter failed too

Additionally, the parameter feature, which was designed to reduce context switching, did not work as intended and instead worsened the situation. The frequency of switching modes increased threefold to complete the task of sending values across modes using parameters, leading to 3x frustration.

To successfully pass data across modes using parameters, users had to switch between modes and configuration panels at least three times.

9 out of 10 testers got lost during these switches.

In case you're curious about what an automation parameter is

(this is how I explained it to my team)

It's similar to what we learned in math class when calculating the distance between home and school:

To calculate the distance, we use the formula:

Velocity × Time = Distance

Parameters

Output

Now, if V = 30 mi/h and T = 0.5 h

D = 30 × 0.5 = 15 mi.

Once you have the formula, you can apply different values to calculate the distance in various situations.

In the context of App Studio, automation parameters work similarly. They allow you to create a central formula (automation/query) that can be applied to any UI you build.

However, despite the clear benefit, this approach diverges from the user journey where users aim to complete their tasks with the UI as their end goal.

# reduce Context switching

"…how might we help builders develop an app easily?"

"…how might we guide builders to successfully create what they want?"

"…how might we improve the usability of switching across modes?"

The problem quickly narrowed down to helping users switch modes smoothly. Without disrupting the fundamental parallel modes structure, I came up with the solutions:

Merge all 3 modes into one page

Instead of listing assets separately under different pages, merging all the assets from the modes into one list could help users navigate quickly and memorize where all assets are located, allowing them to backtrack easily.

Make parameter optional

Instead of requiring parameters to pass data across modes, making them optional and allowing users to pass values via expressions could be extremely helpful.

And yes, App Studio turned into dark theme at this time

# On top of that - it opens more opportunities

Beyond solving the mode switching problem, I discovered that by leveraging the new IA proposal, additional interactions become possible to further enhance the productivity.

Drag & drop across modes

Assets can be dragged and dropped across modes from the left panel.

For example, an entity can be connected to a table component to display data by dragging and dropping the entity onto the component.

Split working canvas

If a user needs to switch to another mode while editing, the canvas will split into two. These two canvases can coexist on the same page, ensuring that no context is lost during the transition.

Overview & Global search

With all assets listed in one place, providing a flowchart to visualize their relationships can be very helpful.

Additionally, a global search can assist users in finding assets faster.

# Another compromise ..(。•ˇ‸ˇ•。)..

I soon brought the ideas into the spotlight, and it was very well received by the team and leadership, earning a high priority.

However, we faced a challenge: hundreds of new features needed development, forcing us to compromise once again. The PM and I had to choose to narrow the scope. Meanwhile, I used this as an opportunity to step back and re-think the user journey and analyze our competitors.

Although initially frustrating, this shift in perspective proved to be a fortunate turn of events.

As I dug deeper into my analysis, it radically changed my perspective later on (Phase 4).

# parameter enhancement

"…how might we improve the usability of switching across modes?"

"…how might we reduce the complexity involved in creating and using parameters?"

Our focus soon shifted to parameters. The goal was to streamline steps and make parameters as accessible as possible within a single mode. I tackled this challenge from two angles: increasing parameter accessibility and revamping the create-to-use steps.

Firstly, I made parameter creation directly on the canvas and within steps, reducing the need to switch panels within automation.

Second, following the notion to make parameter optional,I redesigned the flow to allow users to send values first and decide later if they need to create parameters for reuse. This reduced mode switching and lowered the learning curve, making parameters necessary only for advanced use cases.

These improvements were implemented shortly after the beta launch. We soon received positive feedback from customers and testers, who found it significantly easier to complete app building tasks.

Phase Three: Addressing the outstanding issues

TL;DR

# beta-launch and testing

We launched the seven-month-old App Studio in Beta and received a ton of interest.✌️Then, we conducted our first set of public testing sessions.

# The outstanding problem - modes switching

The testers went through the journey as planned, and the biggest pain points we discovered were no suprise - switching modes and using automation parameters.

We found that users understood the concept of the three modes and acknowledged their benefits. However, during hands-on testing, mode switching became more frustrating than expected. More than 90% of users reported getting lost when switching modes and needing to backtrack to where they were working. The reason was surprisingly simple but was overlooked in the design process.

Each time the mode switched, the left panel refreshed, making it difficult for users to quickly locate the asset they needed to focus on.

# ...and parameters failed too

Additionally, the parameter feature, which was designed to reduce context switching, did not work as intended and made the situation worse. Sending values across modes with parameters tripled the number of mode switches needed to complete the task, and tripled the frustration along with it.

To successfully pass data across modes using parameters, users had to switch between modes and configuration panels at least three times.

9 out of 10 testers got lost during these switches.

In case you're curious about what an automation parameter is

(this is how I explained it to my team)

It's similar to what we learned in math class when calculating the distance between home and school:

To calculate the distance, we use the formula:

Velocity × Time = Distance

Here, velocity and time are the parameters, and distance is the output.

Now, if V = 30 mi/h and T = 0.5 h:

D = 30 × 0.5 = 15 mi

Once you have the formula, you can apply different values to calculate the distance in various situations.

In the context of App Studio, automation parameters work similarly. They allow you to create a central formula (automation/query) that can be applied to any UI you build.
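To make the analogy concrete, here is a minimal Python sketch. It is a hypothetical illustration of the idea only, not App Studio's actual automation API: `distance_automation` stands in for the central formula, and the two UI component names are made up. Each component reuses the same formula with its own parameter values.

```python
# Hypothetical sketch: one "automation" defined once with parameters,
# then reused by different UI components with different values.
# None of these names come from App Studio; they only illustrate the concept.

def distance_automation(velocity_mph: float, time_h: float) -> float:
    """The central formula: velocity and time are parameters, distance is the output."""
    return velocity_mph * time_h

# Two hypothetical UI components, each supplying its own parameter values.
ui_components = {
    "home_to_school_card": {"velocity_mph": 30, "time_h": 0.5},
    "weekend_trip_card": {"velocity_mph": 60, "time_h": 2},
}

# Apply the same automation to every component's values.
results = {name: distance_automation(**params) for name, params in ui_components.items()}
print(results)  # {'home_to_school_card': 15.0, 'weekend_trip_card': 120}
```

The point is the same one the parameter feature aimed for: define the logic once and supply different values wherever it is used.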

However, despite this clear benefit, the approach runs counter to the user journey, in which users work toward a finished UI as their end goal.

# Reduce context switching

"…how might we help builders develop an app easily?"

"…how might we guide builders to successfully create what they want?"

"…how might we improve the usability of switching across modes?"

The problem quickly narrowed down to helping users switch modes smoothly. Without disrupting the fundamental structure of parallel modes, I came up with two solutions:

Merge all 3 modes into one page

Instead of listing assets separately on different pages, merging the assets from all modes into one list could help users navigate quickly and remember where each asset is located, so they can backtrack easily.

Make parameters optional

Instead of requiring parameters to pass data across modes, making them optional and allowing users to pass values via expressions could be extremely helpful.

And yes, App Studio switched to a dark theme around this time.

# On top of that - it opens more opportunities

Beyond solving the mode-switching problem, I discovered that the new IA proposal made additional interactions possible that could further boost productivity.

Drag & drop across modes

Assets can be dragged and dropped across modes from the left panel.

For example, an entity can be connected to a table component to display data by dragging and dropping the entity onto the component.

Split working canvas

If a user needs to switch to another mode while editing, the canvas will split into two. These two canvases can coexist on the same page, ensuring that no context is lost during the transition.

Overview & Global search

With all assets listed in one place, providing a flowchart to visualize their relationships can be very helpful.

Additionally, a global search can assist users in finding assets faster.

# Another compromise ..(。•ˇ‸ˇ•。)..

I soon presented the ideas, and they were very well received by the team and leadership, earning high priority.

However, we faced a challenge: hundreds of new features still needed development, forcing us to compromise once again. The PM and I had to narrow the scope. Meanwhile, I used this as an opportunity to step back, rethink the user journey, and analyze our competitors.

Although initially frustrating, this shift in perspective proved to be a fortunate turn of events.

Digging deeper into this analysis later radically changed my perspective (Phase 4).

# parameter enhancement

"…how might we improve the usability of switching across modes?"

"…how might we reduce the complexity involved in creating and using parameters?"

Our focus soon shifted to parameters. The goal was to streamline steps and make parameters as accessible as possible within a single mode. I tackled this challenge from two angles: increasing parameter accessibility and revamping the create-to-use steps.

First, I made it possible to create parameters directly on the canvas and within steps, reducing the need to switch panels within an automation.

Second, following the idea of making parameters optional, I redesigned the flow so users can send values first and decide later whether they need to create parameters for reuse. This reduced mode switching and lowered the learning curve, leaving parameters necessary only for advanced use cases.

These improvements were implemented shortly after the beta launch. We soon received positive feedback from customers and testers, who found app-building tasks significantly easier to complete.

Phase Four: Intention first in the age of GenAI



# Builder > Team building

After finishing the competitor analysis mentioned above, I uncovered a significant insight: enterprise-facing products typically share a structure similar to ours, whereas small-business products do not. This brought Conway's Law to mind (organizations tend to design systems that mirror their own communication structures), and I realized that we needed to revise our persona to reflect team-based building, where different individuals work in different modes at the same time.

This prompted me to question whether we were solving the right problem.

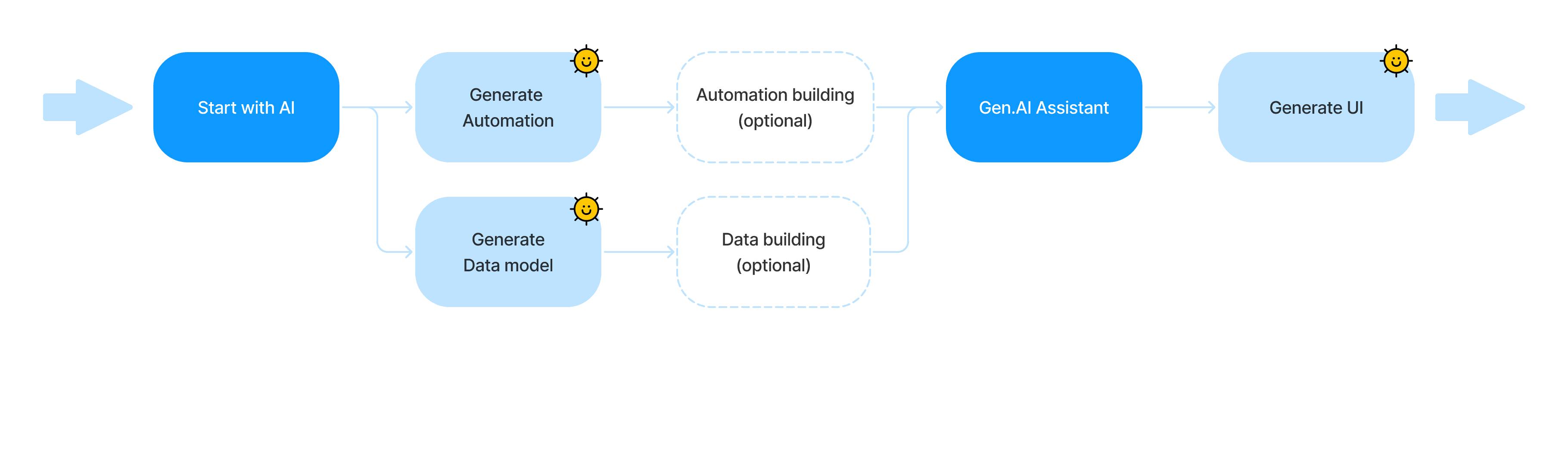

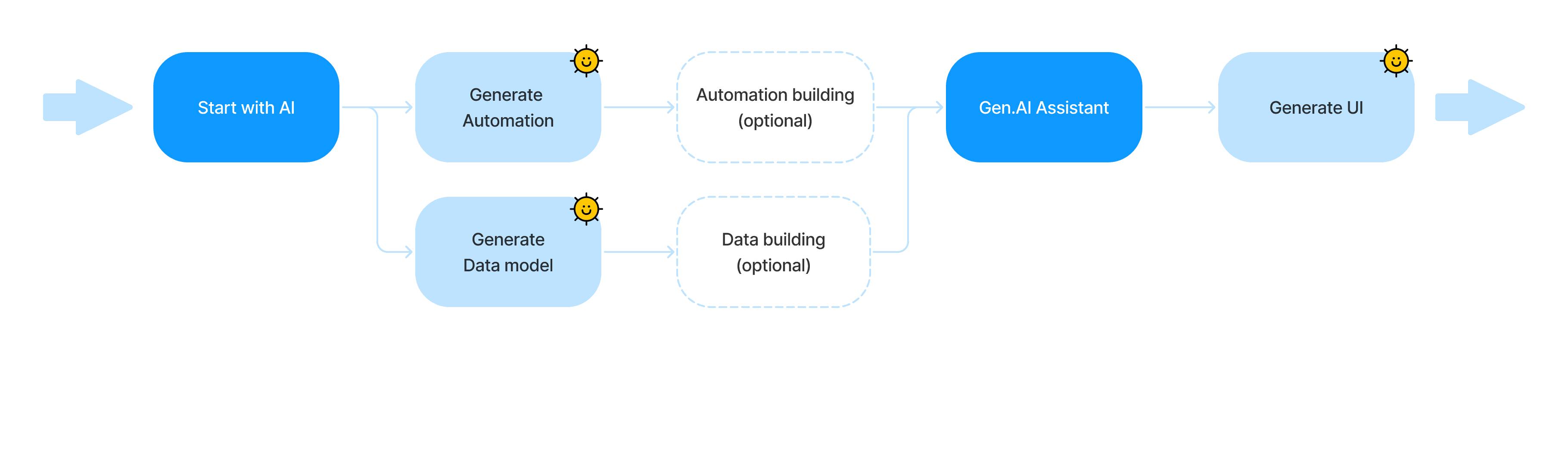

# The Emergence of GenAI

At the same time, as everything turned to neon gradients, AI became an unavoidable topic for all of us, and App Studio was no exception. Inspired by the simple text-box AI interaction, I recognized a critical pitfall:

Users’ goals are not just to build an app, but to create solutions that address their team's problems.


# The GenAI Tool Paradox

To adopt an AI-first journey, we faced an "unsolvable" constraint: how do we let users verify that the output (the end app) meets all their requirements, and thereby build trust?

Although UI previewing is just one click away, it doesn’t guarantee that the automation and data model are precise.

# AI > Intention > UI

Having worked on automation constantly, I found the answer clear: automation. Users communicate their problem-solving methods to the system through the automation workflow and the data model, using various functions.

This realization was an aha moment: suddenly everything aligned.

# Refining the user's goal and journey - connective tissue

I shared my analysis and new perspective with the team, and it was widely accepted. In fact, many of us had been considering similar ideas. Along with the new AI-first direction, I revisited the original problem we were trying to solve and revised the story we had been telling.

"…how might we guide builders to successfully create what they want?"

"……

Back to the original problem statement

This time, we aimed to promote an automation-first journey and connect the modes. The goal was to introduce a linear user journey with upstream (automation and data) and downstream (UI) components. Here’s a rough outline of how it looks:

AI-Driven Flow, 2024

The new journey also reduces parameter complexity and (not by design) addresses a problem the UI team faced: "Customers often have no idea where to start; throwing elements onto a virtual canvas is a common but less purposeful first step."

Now, by guiding users to think about business logic and requirements first, we could generate the UI from those inputs.

# minimal updates

As we approached the original launch date, we decided to take a small step by adding prepackaged Create/Read/Update/Delete (CRUD) automations. This allowed users to select a function and connect it to a compatible UI component immediately after integrating data from AWS services.

Additionally, AI would be involved in each transaction to ensure that intentions were well translated into a usable interface.


# Future-proofing - AI-dominated journey

Knowing the gap between our current state and our ultimate vision, I began exploring a comprehensive layout and IA that better align with the automation/data-first flow. This preparation was crucial for the extensive feedback we anticipated after the public launch.

As I write this case study, the new layout concept is still under development and testing.

The launch

Launch at AWS Summit

In July 2024, App Studio was officially launched and publicly announced at the AWS Summit.

Deloitte, a leading provider of audit and assurance, consulting, financial advisory, risk advisory, tax, and related services

"App Studio can help our technical employees build secure, scalable applications in minutes that streamline tasks and increase operational efficiency. As a global provider working with brands from all around the world across various industries, our teams need to operate across a diverse set of clients that all have distinct tasks and processes. With App Studio, our technical employees are able to take charge and easily go from idea to application in just a few sentences, streamlining these activities for the entire team. As teams grow and business goals change, App Studio applications can efficiently scale and shift to our employees’ needs, helping us better deliver for our clients."

First-week metrics: sign-up customers, active users, apps built, and preview requests received

Sorry. For confidentiality reasons, I have omitted the actual values for these metrics.

Customer feedback

Customer efficiency improvement: more than 20%

Questions in #App-Studio-Interest: unable to count. A loooot...

Designed in collaboration with Fran Quinn, Annie Xu, Yawen Wang, Darren Luvaas. Research partnered with Solee Shin.

Design and content © Sicheng Lu 2024